Strategy 1: Leveraging Full Context to Address Temporal Queries

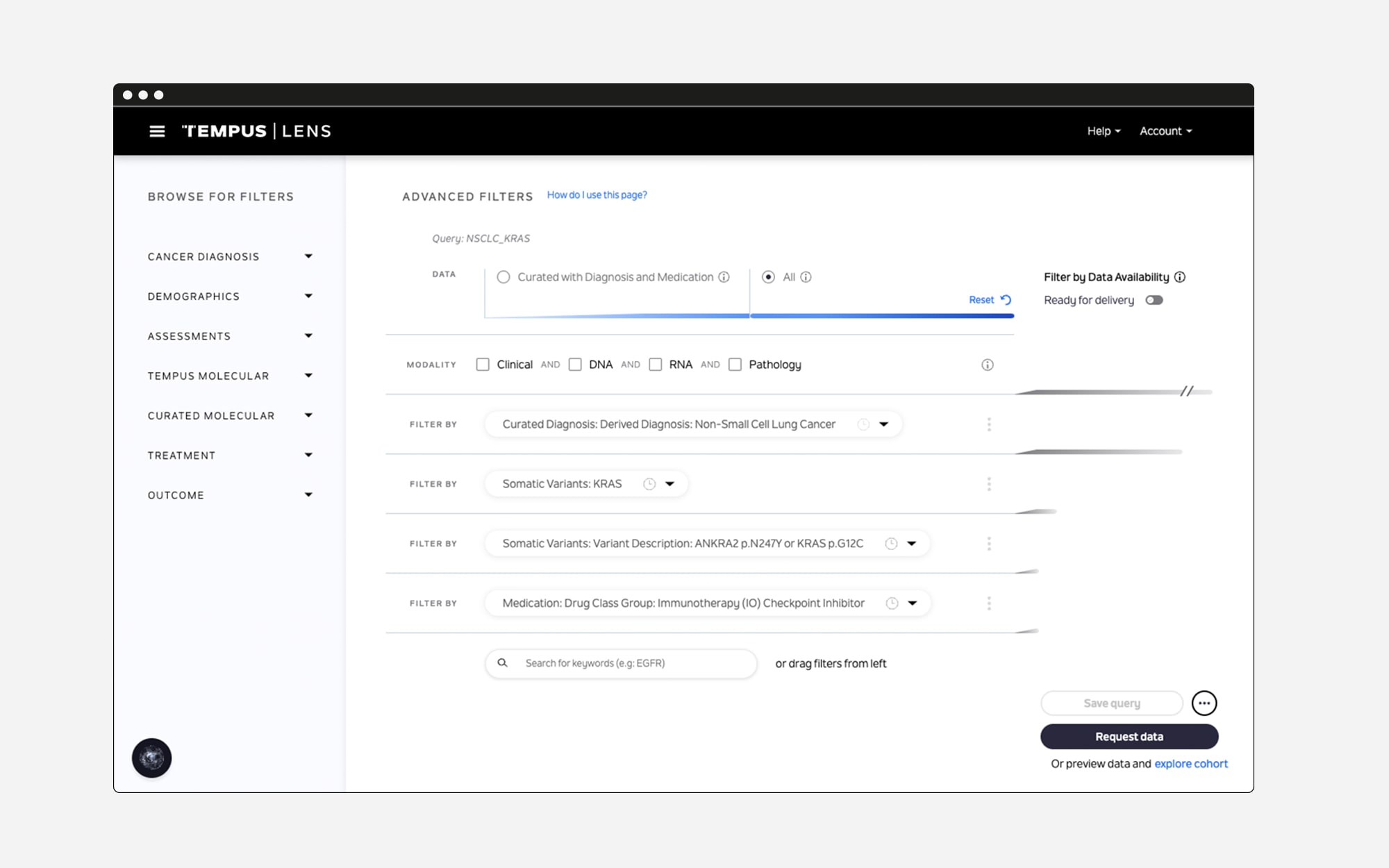

Many inclusion/exclusion criteria in clinical trial matching and care gap discovery have a temporal component. For instance, a question like “Is this medication administered as the first line of therapy?” requires understanding the sequence of events across a patient’s records.

With a traditional RAG approach, only documents specifically tagged as relevant to medications might be retrieved. That is not enough to determine whether the medication was administered as first-line or second-line therapy: the answer depends on which treatments came before it, and the generative LLM lacks that fuller patient context.

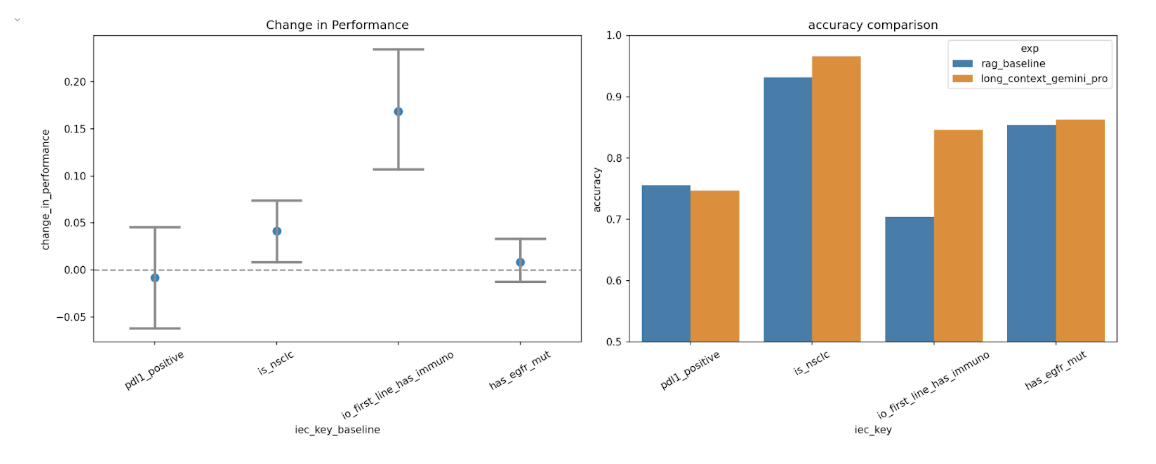

To overcome this, we’ve adopted large-context LLMs capable of processing extensive patient records. By feeding most or all patient notes into the LLM, we enable it to better understand the temporal relationships between events, delivering more accurate and contextually aware responses.
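
As a minimal sketch of what "feeding most or all patient notes into the LLM" can look like, the snippet below orders notes by date and labels each one so the model can reason about sequence. The `Note` fields, the function names, and the prompt wording are assumptions made for illustration; they are not taken from our production pipeline.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Note:
    """Hypothetical shape for one clinical note; real records will differ."""
    note_date: date
    note_type: str
    text: str


def build_patient_context(notes: list[Note]) -> str:
    """Concatenate notes oldest-first, each under a dated header."""
    # sorted() is stable, so notes sharing a date keep their input order.
    ordered = sorted(notes, key=lambda n: n.note_date)
    return "\n\n".join(
        f"[{n.note_date.isoformat()}] {n.note_type}\n{n.text}" for n in ordered
    )


def build_prompt(question: str, notes: list[Note]) -> str:
    """Wrap the chronological record and the question in one prompt.

    The instruction wording here is illustrative only.
    """
    return (
        "Patient notes in chronological order:\n\n"
        f"{build_patient_context(notes)}\n\n"
        f"Question: {question}\n"
        "Answer YES or NO."
    )
```

Putting an explicit date header on every note is a design choice in this sketch: it gives the model a visible timeline rather than relying on dates buried in the note text.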

Strategy 2: Employing an Agentic Approach for Data Validation

In our initial implementation of RAG for our unstructured clinical note search workflow, the LLM was instructed to respond with “YES” if the retrieved chunks met the criteria and “NO” otherwise. However, we observed that this approach often failed when:

- Temporal questions required understanding the sequence of events (e.g., determining if event A occurred before event B).

- Insufficient query expansion led to the retrieval of incomplete or irrelevant chunks.

In many cases, the necessary information existed in other parts of the patient’s records or could be inferred from a broader dataset. To address this, we developed a hybrid solution combining RAG with a full-patient-record query approach.

The Hybrid Solution: RAG + Large-Context LLMs

Our hybrid strategy integrates RAG with LLMs featuring context windows exceeding 1 million tokens (e.g., Gemini). Here’s how it works:

- Initial Query Validation: The first LLM, working only from the retrieved chunks, evaluates whether they are sufficient to make a confident determination. If it cannot confidently choose between “YES” and “NO,” it responds with “Unsure.”

- Escalation to Full Context: The “Unsure” response triggers a secondary query to a full-patient-record agent. This agent processes a much larger context to extract the necessary insights and deliver a definitive answer.
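
The two steps above can be sketched as a small router. This is an illustration under stated assumptions, not our actual implementation: the two models are passed in as plain callables that take a prompt string and return text, and the names `normalize_answer` and `answer_criterion` as well as the prompt wording are hypothetical.

```python
from typing import Callable

VALID_ANSWERS = {"YES", "NO", "UNSURE"}


def normalize_answer(raw: str) -> str:
    """Map free-form model output onto YES / NO / UNSURE.

    Anything unrecognized is treated as UNSURE (an assumption of this sketch).
    """
    cleaned = raw.strip().strip(".\"'").upper()
    return cleaned if cleaned in VALID_ANSWERS else "UNSURE"


def answer_criterion(
    question: str,
    retrieved_chunks: list[str],
    full_record: str,
    rag_llm: Callable[[str], str],
    full_context_llm: Callable[[str], str],
) -> tuple[str, str]:
    """Return (answer, source), where source is 'rag' or 'full_record'."""
    # Step 1: ask the first model using only the retrieved chunks.
    rag_prompt = (
        "Using only the excerpts below, answer YES or NO. "
        "If the excerpts are not sufficient, answer UNSURE.\n\n"
        + "\n---\n".join(retrieved_chunks)
        + f"\n\nQuestion: {question}"
    )
    first = normalize_answer(rag_llm(rag_prompt))
    if first != "UNSURE":
        return first, "rag"

    # Step 2: escalate to the large-context model with the full record.
    full_prompt = (
        "Using the complete patient record below, answer YES or NO.\n\n"
        f"{full_record}\n\nQuestion: {question}"
    )
    return normalize_answer(full_context_llm(full_prompt)), "full_record"
```

In this sketch the large-context call happens only on the "Unsure" path. If the second model's output cannot be parsed, the function returns "UNSURE" rather than guessing, so the caller can decide how to handle it.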

By incorporating this agentic approach, we’ve significantly improved performance while keeping costs in check: the more expensive full-record query runs only when the first pass returns “Unsure.” Complex, nuanced questions, such as those involving temporal relationships or incomplete retrievals, are then answered from the full patient context rather than from partial evidence.

Conclusion

Generative AI has immense potential in healthcare, but its efficacy depends on thoughtful implementation and strategies to mitigate inherent challenges. At Tempus, we continue to innovate by combining advanced LLM capabilities with hybrid approaches like RAG + large-context queries. These strategies empower us to extract deeper insights, optimize clinical decision-making and ultimately improve patient outcomes.