Demystifying Blocks and Assemblies

Tony begins by revisiting the Lens Customer Assistant agent created in the last episode, but decides to strip it down to explain the fundamentals:

- Blocks: Analogous to functions in programming, blocks have inputs and outputs and perform specific operations or business logic.

- Assemblies: A collection of interconnected blocks, forming the blueprint of an AI agent. Assemblies define how data flows through the agent.
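To make the function analogy concrete, here is a minimal Python sketch of blocks and an assembly. Everything in it is hypothetical: `Block`, `Assembly`, `connect`, and the two example blocks are illustrative names, not Agent Builder's actual API. Each block maps a dict of named inputs to a dict of named outputs, and the assembly runs its blocks in dependency order, routing outputs to inputs along its edges.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Any, Callable

Values = dict[str, Any]


@dataclass
class Block:
    """Hypothetical block: like a function, it maps named inputs to named outputs."""
    name: str
    fn: Callable[[Values], Values]


@dataclass
class Assembly:
    """Hypothetical assembly: blocks plus the edges that define how data flows."""
    blocks: dict[str, Block] = field(default_factory=dict)
    # Each edge is (source block, output name, target block, input name).
    edges: list[tuple[str, str, str, str]] = field(default_factory=list)

    def add(self, block: Block) -> None:
        self.blocks[block.name] = block

    def connect(self, src: str, output: str, dst: str, input_: str) -> None:
        self.edges.append((src, output, dst, input_))

    def run(self, seed: dict[str, Values]) -> dict[str, Values]:
        """Run each block once, upstream first; `seed` supplies external inputs."""
        deps: dict[str, set[str]] = {name: set() for name in self.blocks}
        for src, _, dst, _ in self.edges:
            deps[dst].add(src)
        results: dict[str, Values] = {}
        for name in TopologicalSorter(deps).static_order():
            inputs = dict(seed.get(name, {}))
            # Pull every edge-connected upstream output into this block's inputs.
            for src, output, dst, input_ in self.edges:
                if dst == name:
                    inputs[input_] = results[src][output]
            results[name] = self.blocks[name].fn(inputs)
        return results


# Two illustrative blocks chained by a single edge.
assembly = Assembly()
assembly.add(Block("normalize", lambda v: {"text": v["text"].strip().lower()}))
assembly.add(Block("count_words", lambda v: {"count": len(v["text"].split())}))
assembly.connect("normalize", "text", "count_words", "text")

results = assembly.run({"normalize": {"text": "  Hello Agent Builder  "}})
print(results["count_words"])  # {'count': 3}
```

Because each block only sees its own inputs, the same block can be dropped into any assembly that wires the right outputs into it.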

Building the Simplest Agent

To illustrate how blocks and assemblies work, Tony creates the most basic agent possible:

- Creating a Basic Agent: He sets up an agent with just two blocks, Input and Output.

- Connecting the Blocks: He links the human input directly to the output, so the agent echoes back whatever the user types.

- Understanding the Assembly: This simple setup demonstrates the core idea behind every assembly, a set of blocks connected by edges.

Result: The agent mirrors the user’s input, showcasing the simplest form of data flow in an assembly.
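The echo agent is small enough to write out by hand. In this hypothetical sketch, the function names and the edge format are illustrative assumptions rather than Agent Builder's internals. The whole assembly is two blocks and one edge that routes the Input block's `message` output into the Output block's `message` input.

```python
from typing import Any, Callable


def input_block(inputs: dict[str, Any]) -> dict[str, Any]:
    # Exposes whatever the human typed as the block's "message" output.
    return {"message": inputs["human_text"]}


def output_block(inputs: dict[str, Any]) -> dict[str, Any]:
    # Displays (here: returns) the message it receives.
    return {"display": inputs["message"]}


BLOCKS: dict[str, Callable[[dict[str, Any]], dict[str, Any]]] = {
    "input": input_block,
    "output": output_block,
}

# The entire assembly: one edge, (source, output) -> (target, input).
EDGES = [(("input", "message"), ("output", "message"))]


def run_echo_agent(human_text: str) -> str:
    results = {"input": BLOCKS["input"]({"human_text": human_text})}
    # Follow each edge into the Output block to assemble its inputs.
    output_inputs = {
        dst_port: results[src][src_port]
        for (src, src_port), (dst, dst_port) in EDGES
        if dst == "output"
    }
    results["output"] = BLOCKS["output"](output_inputs)
    return results["output"]["display"]


print(run_echo_agent("Hello, Tempus!"))  # Hello, Tempus!
```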

Introducing Intelligence with an LLM Block

To enhance the agent’s functionality, Tony introduces an LLM (Large Language Model) Block:

- Adding the LLM Block: He inserts an LLM block between the Input and Output blocks.

- Configuring the LLM Block:

  - Model Selection: He chooses GPT-4 for its advanced language capabilities.

  - System Prompt: He sets the context with “You are an AI assistant. You are helping with a demo for a company video for Tempus AI. Please keep it brief but professional.”

  - Connecting Inputs: He links the human message to the LLM block’s message parameter.

- Previewing the Agent: The agent now provides intelligent, context-aware responses based on user input.

Result: The agent can answer questions appropriately, demonstrating a more functional assembly.
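Inside an LLM block, the system prompt and the human message are typically combined into a list of role-tagged chat messages before the model is called. The sketch below assumes the common chat-completions message shape (`role`/`content` dicts); `build_messages`, `llm_block`, and `fake_model` are hypothetical, and the stub stands in for GPT-4 so the example runs offline. Agent Builder's real block configuration may differ.

```python
from typing import Callable

Message = dict[str, str]
# Any callable mapping a message list to reply text can play the model's role.
Model = Callable[[list[Message]], str]

# System prompt from the episode, used as the block's configured default.
SYSTEM_PROMPT = (
    "You are an AI assistant. You are helping with a demo for a company video "
    "for Tempus AI. Please keep it brief but professional."
)


def build_messages(system_prompt: str, human_message: str) -> list[Message]:
    """Pair the configured system prompt with the human message wired into the block."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": human_message},
    ]


def llm_block(inputs: dict[str, str], model: Model,
              system_prompt: str = SYSTEM_PROMPT) -> dict[str, str]:
    """Hypothetical LLM block: reads its "message" input, emits a "response" output."""
    return {"response": model(build_messages(system_prompt, inputs["message"]))}


def fake_model(messages: list[Message]) -> str:
    # Offline stand-in for GPT-4: echoes the user turn instead of generating text.
    return f"(stub reply to: {messages[-1]['content']})"


result = llm_block({"message": "What does Tempus AI do?"}, model=fake_model)
print(result["response"])  # (stub reply to: What does Tempus AI do?)
```

Keeping the model behind a plain callable means swapping the stub for a real model client changes nothing else in the block.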

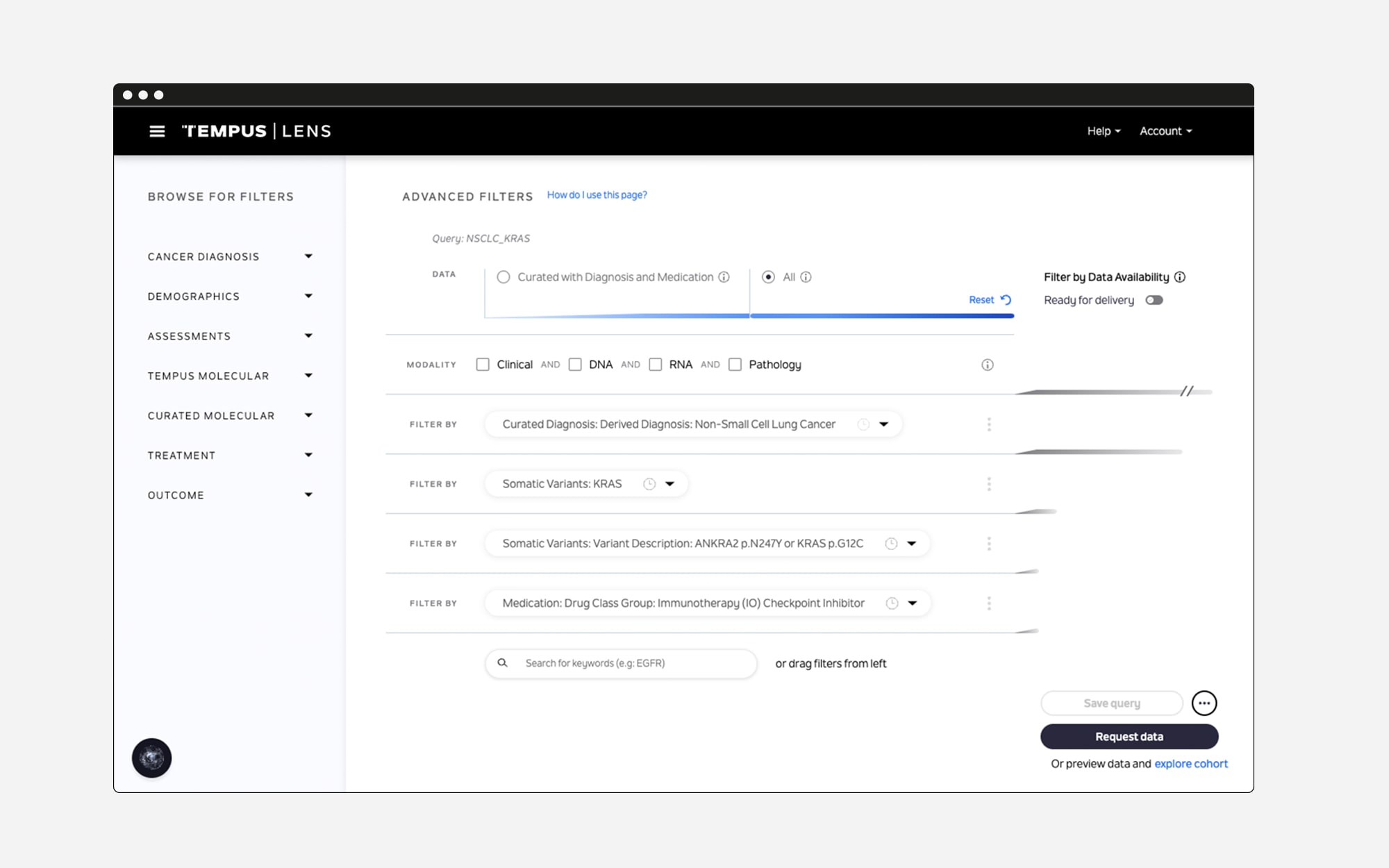

Exploring a More Complex Agent

Tony revisits the Lens Customer Assistant agent to delve into advanced features:

- Understanding the Expanded Assembly:

  - The human input goes not only to the LLM block but also to additional processing blocks.

- Implementing Retrieval Augmented Generation (RAG):

  - Use Relevant Documents Block: Fetches pertinent documents from a pre-built collection based on the user’s query.

  - Vector Store and Embeddings: Documents are stored in a vector store as embeddings, numerical representations of their meaning that make semantic search efficient.

- Data Merging and Context Enhancement:

  - Merging Outputs: Combines the retrieved documents with their sources.

  - Attachments: The merged data is passed to the LLM block as attachments, giving it the context it needs for richer responses.

- Citing Sources:

  - Use Document Similarity Scoring Block: Calculates how relevant each document is to both the user’s question and the agent’s response, so the most relevant sources are prioritized.

- User Interaction: The agent can accept and process documents uploaded by the user, and cites them if they are relevant.

Result: The agent delivers informed answers with cited sources, enhancing trust and utility.
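The retrieval, merging, and citation steps can be sketched end to end in plain Python. This is a toy under loud assumptions: word-count vectors stand in for a learned embedding model, a Python list stands in for the vector store, and scoring each source by the average of its cosine similarity to the question and to the response is one plausible reading of the Use Document Similarity Scoring Block, not its documented formula. All function names and the sample documents (paraphrased from this article) are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real vector store holds dense model embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical pre-built collection of (source, text), embedded once up front.
COLLECTION = [
    ("blocks.md", "Blocks have inputs and outputs and perform specific operations or business logic."),
    ("assemblies.md", "An assembly is a collection of interconnected blocks that defines how data flows through an agent."),
    ("rag.md", "Retrieval augmented generation fetches relevant documents and passes them to the LLM as context."),
]
VECTOR_STORE = [(src, text, embed(text)) for src, text in COLLECTION]


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Stand-in retrieval step: top-k documents by similarity to the query."""
    q = embed(query)
    ranked = sorted(VECTOR_STORE, key=lambda row: cosine(q, row[2]), reverse=True)
    return [(src, text) for src, text, _ in ranked[:k]]


def merge_as_attachment(docs: list[tuple[str, str]]) -> str:
    """Combine retrieved documents with their sources into one context string."""
    return "\n\n".join(f"[source: {src}]\n{text}" for src, text in docs)


def rank_citations(question: str, response: str,
                   docs: list[tuple[str, str]]) -> list[tuple[str, float]]:
    """Score each source against both the question and the response, best first."""
    q, r = embed(question), embed(response)
    scored = [(src, (cosine(q, embed(text)) + cosine(r, embed(text))) / 2)
              for src, text in docs]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


question = "How does data flow through an agent?"
docs = retrieve(question)
attachment = merge_as_attachment(docs)
# In the real assembly, the LLM block would write this from the question plus `attachment`.
response = "An assembly defines how data flows through an agent: blocks connected by edges."
for source, score in rank_citations(question, response, docs):
    print(f"{source}: {score:.2f}")
```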

Live Demonstration: Uploading and Citing Documents

Tony provides a practical example to showcase the agent’s capabilities:

- Uploading a Document: He uploads an arbitrary patent document to the agent.

- Agent’s Response:

  - Recognizes that the document is unrelated to the question.

  - Still provides a relevant answer based on its existing data.

- Displaying Sources: The agent cites relevant sources and provides direct links, demonstrating transparency.

Result: The agent intelligently handles additional inputs and maintains response quality.
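One simple way to get the behavior shown in this demo, where an unrelated upload is read but not cited, is to gate each uploaded document on a relevance threshold. This sketch is an assumption about the mechanism, not a description of how the Lens agent actually decides: word-count cosine similarity stands in for embeddings, and `MIN_RELEVANCE`, the function names, and the sample uploads are hypothetical.

```python
import math
import re
from collections import Counter

MIN_RELEVANCE = 0.2  # hypothetical cutoff; a real system would tune this


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    # Cosine similarity between word-count vectors of the two texts.
    va, vb = bag_of_words(a), bag_of_words(b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = (math.sqrt(sum(n * n for n in va.values()))
            * math.sqrt(sum(n * n for n in vb.values())))
    return dot / norm if norm else 0.0


def citable_uploads(question: str, uploads: dict[str, str]) -> list[str]:
    """Names of uploaded documents relevant enough to cite; the rest are not cited."""
    return [name for name, text in uploads.items()
            if similarity(question, text) >= MIN_RELEVANCE]


question = "How do blocks pass data to each other in an assembly?"
uploads = {
    "patent.txt": "A hinge mechanism for a folding chair with a locking pin.",
    "notes.txt": "In an assembly, edges pass data from one block to the next block.",
}
print(citable_uploads(question, uploads))  # ['notes.txt']
```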

The Significance of Blocks and Assemblies

- Modularity: Blocks enable the creation of reusable components, simplifying complex agent designs.

- Flexibility: Assemblies can be tailored from simple to highly intricate configurations to suit various applications.

- Transparency: Visualizing the agent’s structure aids in understanding, debugging, and optimizing performance.

Looking Ahead: Advanced Agent Architectures

Tony concludes by teasing topics for the next episode, where he’ll explore:

- Complex Agents: Building agents with multifaceted functionality.

- Parallel Agents: Designing agents that can process multiple tasks simultaneously.

- Subagents and Recursive Agents: Creating agents within agents for hierarchical processing.

- Branching Logic Agents: Implementing decision trees within agents for dynamic responses.

These advanced concepts will demonstrate the full potential of Tempus’s Agent Builder in handling sophisticated AI tasks.

Actionable Insights

- Experiment with Agent Builder: Start with simple assemblies to grasp the fundamentals before moving to complex designs.

- Leverage Document Retrieval: Enhance your agents by integrating relevant documents to provide context-rich answers.

- Engage with Upcoming Features: Stay tuned for future episodes to learn how advanced agent architectures can extend what your AI applications can do.